Database practitioners are always in pursuit of new ways to streamline operating costs. The total cost of database ownership can be broken down essentially into the license cost, the storage cost, and the compute cost. There are dozens of other soft and hard costs that are well worth considering and are covered in depth in this cost of ownership report, but the focus in this blog is the reduction of storage costs.

If you’re using S3, and you’d like to reduce costs with nearly no effort, this blog is for you.

The cost of cloud storage

Let’s use an oversimplified example to capture the cost breakdown: if your total cost of database ownership is $100,000, then roughly $50,000 goes to platform expenses and $50,000 to the database license. Of those platform expenses, roughly $25,000 will be storage. Usually around 20% of that storage is nearline (or “cold”) storage, which adds up to $5,000.
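Spelled out, the arithmetic of this deliberately simplified example is shown below. The last line is only an illustration: it assumes the "80% less than Amazon S3" figure quoted later in this post applies unchanged to the whole cold-storage slice, which will not hold exactly for every workload.

```latex
\begin{aligned}
\text{platform} &= 0.50 \times \$100{,}000 = \$50{,}000 \\
\text{storage} &= 0.50 \times \$50{,}000 = \$25{,}000 \\
\text{cold storage} &= 0.20 \times \$25{,}000 = \$5{,}000 \\
\text{cold storage at 80\% less} &= (1 - 0.80) \times \$5{,}000 = \$1{,}000
\end{aligned}
```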

If you want to pay less, improve your RPO/RTO, and never pay egress costs, take a look at Wasabi.

What is Wasabi Cloud Storage?

Wasabi is a one-size-fits-all cloud storage technology that allows users to affordably store a nearly infinite amount of data. Here is what you need to know about Wasabi:

- AWS S3 API compatible

- 6x faster than AWS S3, 8x faster than Google storage

- 80% less expensive than Amazon S3

- No egress costs

- Available globally

- Accessible from your CockroachDB cluster running on-premises, in your preferred public cloud, or in a multi-cloud or hybrid environment

If you are backing up your CockroachDB cluster to Amazon S3 today, the simple truth is that it can be faster and 5x cheaper with Wasabi. All you need to do is point to a Wasabi bucket instead of an Amazon S3 bucket. The backup and restore commands stay practically unchanged; only the location URI is different.

If you are currently operating in a public cloud or on-premises, storing your CockroachDB backup offsite in a Wasabi bucket will make your disaster recovery defenses stronger and improve backup economics as well. To back up to a Wasabi storage bucket, follow the Amazon S3 examples in the CockroachDB docs.
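As a rough illustration of how small the change is, the two statements below differ only in the location URI. The bucket name, access key, and secret are placeholders, and the `AWS_ENDPOINT` and `AWS_REGION` values are simply the ones used in the examples in this post; adjust them to the Wasabi region that hosts your bucket.

```sql
-- Backup to Amazon S3 (placeholder bucket and credentials).
BACKUP DATABASE billing
INTO 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret';

-- The same backup to Wasabi: the URI additionally names the Wasabi endpoint and region.
BACKUP DATABASE billing
INTO 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';
```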

How to use Wasabi Cloud Storage with CockroachDB

Backups and restores are the cornerstones of your disaster recovery planning and implementation. However, there are more potential use scenarios leveraging the Wasabi cloud storage:

- Disaster Recovery

- CockroachDB Cluster Migration

- CockroachDB Cluster Cloning

- Data Import - Export

CockroachDB Backup and Restore for Disaster Recovery

CockroachDB has been validated for use with Wasabi as a cloud storage target for all Backup/Restore types of a cluster, database, or table. CockroachDB natively supports AWS S3-compatible Wasabi storage without any other product or service dependency.

Cockroach Labs recommends enabling Wasabi S3 Object Lock to protect the validity of CockroachDB backups.

Because Wasabi offers a single class of storage that provides better than Amazon S3 performance at lower than Amazon Glacier prices, the S3_STORAGE_CLASS parameter in the URI doesn’t apply to Wasabi storage backups.

A user with sufficient database backup and restore privileges and sufficient storage permissions can initiate, configure, and schedule backup and restore operations via SQL statements, using any of the supported database client interfaces or tools.
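Scheduling, for instance, could be sketched as follows. The schedule label `billing_nightly`, the daily cadence, and the bucket path are illustrative assumptions rather than recommendations; pick a cadence that matches your own recovery objectives.

```sql
-- Hypothetical schedule: a full backup of the billing database every day,
-- written to a Wasabi bucket (placeholder bucket and credentials).
CREATE SCHEDULE billing_nightly
FOR BACKUP DATABASE billing
INTO 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1'
RECURRING '@daily'
FULL BACKUP ALWAYS
WITH SCHEDULE OPTIONS first_run = 'now';

-- List the schedules to confirm that the new one was created.
SHOW SCHEDULES;
```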

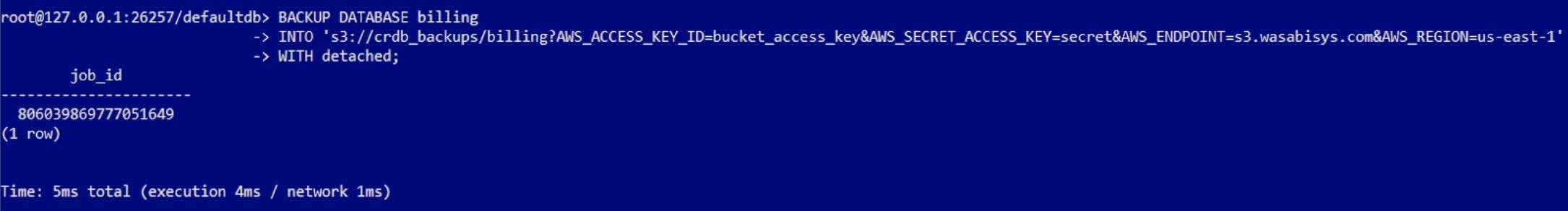

For example, a one-time full backup can be issued with the following statement:

BACKUP DATABASE billing
INTO 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1'
WITH detached;

Using the CockroachDB interactive SQL client it would look like this:

CockroachDB Cluster Migration

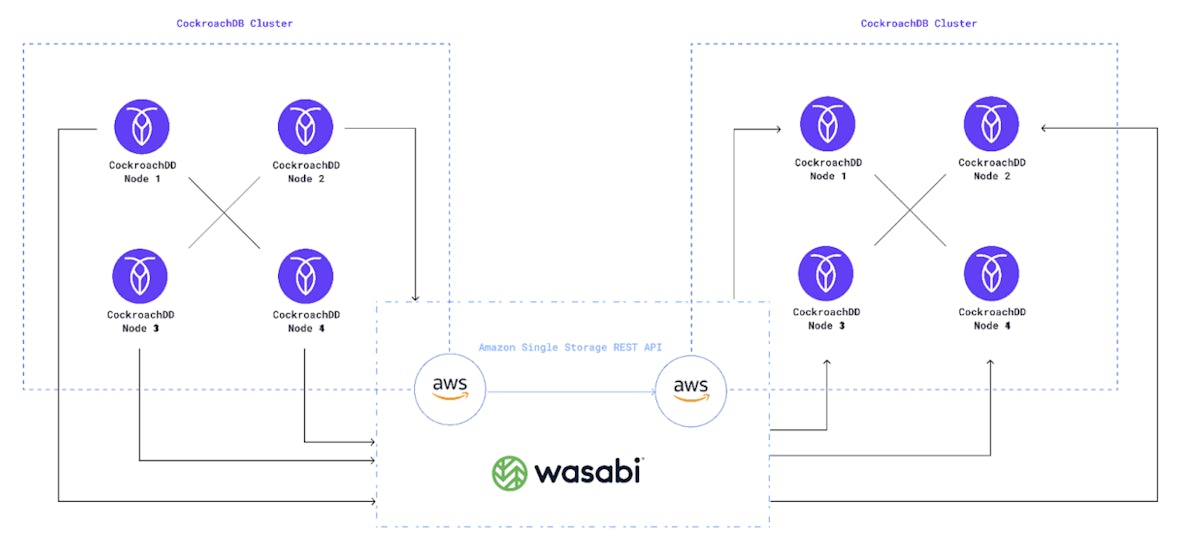

With the current surge of interest in cloud databases, migration requests from CockroachDB On-Premises or Self-Hosted to CockroachDB Dedicated (our managed service) are common.

In our practice, one of the simplest ways to migrate a homogeneous CockroachDB environment, for example moving your CockroachDB cluster into CockroachCloud, is a successive backup and restore that moves the data and metadata through a commonly accessible staging storage such as a Wasabi bucket. While all migrations have custom development elements due to specific requirements, the high-level blueprint covers these points:

- The actual application switchover from the old to the new cluster can be very quick, within a couple of seconds.

- You can achieve practically uninterrupted reads (delayed only during the few seconds it takes to switch over) with relatively simple changes in the application logic.

- The writes need to be suspended for the elapsed time of the successive backup-restore, which typically takes minutes to tens of minutes; during that period the writes can be buffered and then replayed against the new cluster to ensure no data loss during migration.
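One possible reading of this blueprint, sketched under assumptions: a full backup is taken ahead of time while the application is still writing, a short incremental backup captures the remaining changes once writes are suspended, and the new cluster restores the latest state. The `s3://migration/billing` path and the `billing` database are placeholders, and a real migration would add verification and the write-replay logic described above.

```sql
-- 1. On the source cluster, ahead of the switchover: take a full backup
--    into the shared Wasabi staging bucket (placeholder path and credentials).
BACKUP DATABASE billing
INTO 's3://migration/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';

-- 2. On the source cluster, after writes are suspended: append an incremental
--    backup to the most recent full backup so only recent changes are copied.
BACKUP DATABASE billing
INTO LATEST IN 's3://migration/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';

-- 3. On the target cluster: restore the most recent backup from the same bucket.
RESTORE DATABASE billing
FROM LATEST IN 's3://migration/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';
```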

For heterogeneous migrations, such as PostgreSQL or MySQL to CockroachDB, a temporary Wasabi storage bucket could provide a cost-effective staging area for the data files in export-import style migrations. CockroachDB is happy to IMPORT data directly from a Wasabi bucket. Here is an example of importing a complete database (schema and data) from a PostgreSQL database dump:

IMPORT PGDUMP 's3://migration/pgdump.sql?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1'
WITH ignore_unsupported_statements;

Or an example of importing a single table from a MySQL database dump:

IMPORT TABLE customers FROM MYSQLDUMP 's3://migration/customers.sql?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1'
WITH skip_foreign_keys;

CockroachDB Cluster Cloning

From time to time, you need to create a new cluster with a dataset and metadata copied from another cluster. For example, you may need to create a point-in-time copy of a production CockroachDB database to seed a staging environment, or to create a database clone for idempotent QA regression testing.

Leveraging backups for cluster cloning is possible because CockroachDB can restore a backup into a cluster whose “shape” differs arbitrarily from that of the source cluster, as long as the clone cluster has sufficient storage capacity.

Thus, a cloning operation effectively equates to a restore, perhaps from a recently taken, regularly scheduled full backup.
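A minimal sketch of such a clone, run on the new cluster, might look like the following. It assumes the production backups of a `billing` database already live under the placeholder path shown; to clone from an older backup, the `LATEST` keyword can be replaced with one of the backup paths listed by the first statement.

```sql
-- List the backups available in the collection (placeholder bucket and credentials).
SHOW BACKUPS IN 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';

-- Restore the most recent backup into the clone cluster.
RESTORE DATABASE billing
FROM LATEST IN 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';
```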

Staging Storage for CockroachDB Data Export-Import

You may have implemented some of the data exchanges in your environment with CockroachDB bulk data exports and imports, which require a staging area for the data files.

A Wasabi storage bucket can serve as a cost-effective staging area for CockroachDB exports and imports, for example:

EXPORT INTO CSV 's3://staging/customers.csv?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1'
WITH delimiter = '|' FROM TABLE bank.customers;
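The import side of the exchange could be sketched as below. This is an assumption-laden example: the target table is presumed to exist already, the column names `id` and `name` are hypothetical, and `{exported_file}` stands in for a file name generated by the export, which you would look up in the bucket and substitute.

```sql
-- Load a pipe-delimited file from the Wasabi staging bucket into an existing table.
-- Column names and the {exported_file} placeholder are illustrative only.
IMPORT INTO bank.customers (id, name)
CSV DATA ('s3://staging/customers.csv/{exported_file}?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1')
WITH delimiter = '|';
```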

Final Thoughts

Total cost of ownership is under the spotlight right now. Businesses are looking for ways to reduce costs while continuing to innovate. For the specific challenge of reducing cloud storage costs, the switch from S3 to Wasabi is worth investigating.

If you’re interested in learning about more strategies for reducing total cost of ownership without compromising on innovation, you can check out the report I mentioned earlier or take a look at this webinar we recently produced. In this session you’ll learn about the efficiencies of running a database as a service, including the value of separating database operations from use and how incredibly fast you can go to market with demanding workloads.

I also recommend these two legacy database migration use cases about reducing costs:

- A sports betting app saved millions switching from PostgreSQL to CockroachDB

- An electronics giant saved millions after migrating from MySQL to CockroachDB

Happy exporting. Or importing. Or both!